Undress AI Mod APK: Understanding The Buzz, The Tech, And Your Digital Footprint

There's a lot of talk these days about artificial intelligence, especially when it comes to creating images. You might have heard about, or seen discussions of, tools like an "undress AI mod APK." This kind of software makes a lot of people curious, and it raises big questions about what's possible with technology and what that means for everyone's privacy. It's worth taking a close look at what this all means.

People are naturally drawn to what's new and what seems to push boundaries, and AI image generation certainly fits that description. The idea of an AI changing pictures in significant ways, like altering clothing, catches attention. It brings up a mix of wonder about the technology and a good deal of concern about how it could be misused. Many people are simply trying to figure out how these things work.

This discussion about an "undress AI mod APK" is not just about a piece of software; it's about the broader implications of AI in our daily lives. It touches on important topics like digital ethics, personal boundaries, and the very real risks that come with powerful tools if they fall into the wrong hands or are used without thought. We'll go over each of these points carefully.

Table of Contents

- What is an "Undress AI Mod APK"?

- The Technology Behind It

- Why People Look for Mod APKs

- The Risks of Unofficial Apps

- Ethical Concerns and Privacy

- Legal Perspectives

- Responsible AI Use

- Legitimate AI Image Tools

- Frequently Asked Questions

What is an "Undress AI Mod APK"?

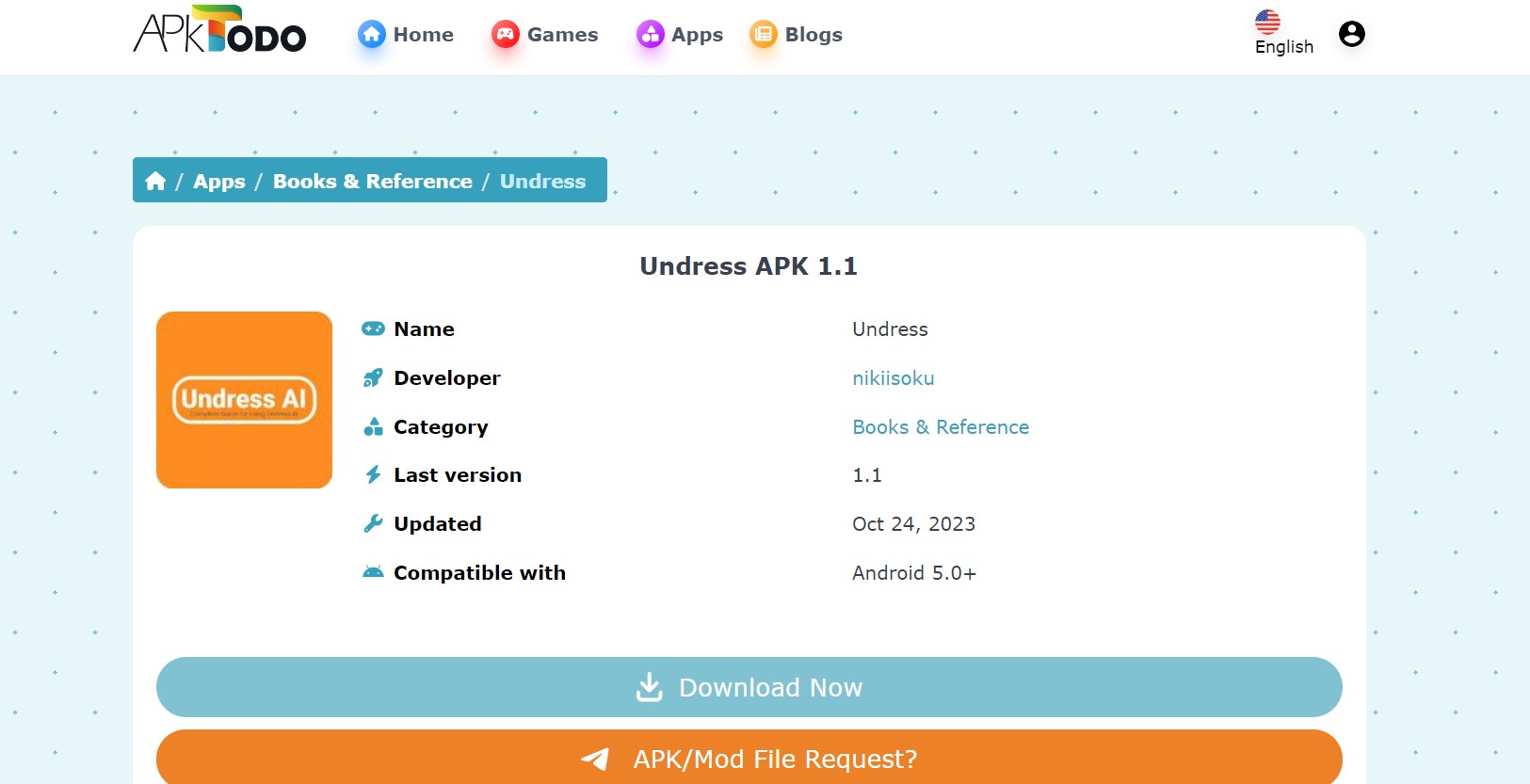

When people talk about an "undress AI mod APK," they are typically referring to a modified version of an Android application that supposedly uses artificial intelligence to remove or alter clothing in images. An "APK" is the package file format used by the Android operating system for the distribution and installation of mobile apps. A "mod APK" is a version of an app that has been altered from its original form, usually by third parties, to add features, remove restrictions, or bypass payment requirements. In short, it's an unofficial custom version.

The "undress AI" part suggests that the core function involves an AI model trained to recognize and change parts of an image, specifically clothing. This kind of AI typically relies on models that have processed vast amounts of image data. The goal is for the model to learn what clothing looks like and to generate an image in which it appears to have been removed or changed.

It's important to understand that these modified apps are not official releases from legitimate developers. They are created and distributed outside of official app stores, which carries its own set of concerns. People might seek them out because they promise capabilities that official apps do not offer, or because they claim to provide premium features for free. These promises are a common reason for their popularity.

The Technology Behind It

The AI technology that makes image manipulation possible, like what's suggested by an "undress AI mod APK," often relies on generative adversarial networks (GANs) and, more recently, diffusion models. These are sophisticated types of artificial intelligence that are very good at creating new data, like images, that look real. It's a bit like an artist who has learned from countless examples and can now create new ones.

GANs work by having two neural networks compete against each other: a generator and a discriminator. The generator tries to create realistic images, and the discriminator tries to tell whether an image is real or fake. Through this constant back-and-forth, the generator gets better and better at producing convincing images. Diffusion models, on the other hand, start with random noise and gradually refine it into a clear image, guided by text prompts or other inputs.

For an AI to "undress" an image, it would need to have been trained on a massive dataset covering both clothing and human anatomy. This training allows the AI to learn the patterns and relationships between these elements. When given a new image, the AI does not reveal anything real: it generates a fabricated guess at what might be under the clothing. It's a complex task, and the results can vary widely.

The effectiveness of such an AI depends heavily on the quality and diversity of its training data. If the data is biased or insufficient, the AI's output might be unrealistic, distorted, or even reflect harmful stereotypes. These systems are technically impressive, yet they also carry significant ethical weight.

Why People Look for Mod APKs

There are several reasons why someone might go looking for a "mod APK," including one that promises AI image alterations. One of the main draws is the idea of getting features that are locked behind a paywall in the official app, or features that simply aren't available at all. People often want to try out advanced capabilities without having to pay for them.

Another reason is the appeal of bypassing geographical restrictions or device limitations. Sometimes an app is not available in a certain country, or it will not run on an older phone. A modified version might, in some cases, remove these barriers and let more people access the software. It's a way to get around official channels.

Then there's the curiosity factor. When a technology like AI image generation gains buzz, people become eager to experiment with it. If official, ethical tools are complex or require subscriptions, a "mod APK" might seem like a quick and easy way to try out the technology firsthand. This drive to explore new tech is a powerful motivator for many.

However, it's important to remember that seeking out and using these modified apps comes with considerable risks. The perceived benefits often come with hidden costs, which the next section covers. The allure of free or unrestricted access can blind people to the dangers involved.

The Risks of Unofficial Apps

Downloading and installing a "mod APK," especially one from an unknown source, carries significant risks for your device and your personal information. When you get an app from outside official app stores like Google Play, you lose the security protections and vetting processes that those platforms provide. You are effectively on your own.

One of the biggest dangers is malware. Modified apps are often tampered with by malicious actors who embed viruses, spyware, or ransomware into the code. These harmful programs can steal your personal data, like passwords and banking information, or even lock your device until you pay a ransom. It's a very real threat with serious consequences.
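One partial safeguard, when a legitimate developer publishes a SHA-256 checksum for a download, is to compare that value against the file you actually received. The sketch below is a minimal illustration in Python; the function names `sha256_of_file` and `matches_published_hash` are made up for this example, and it assumes the published hash was obtained from a channel you trust.

```python
import hashlib
import hmac


def sha256_of_file(path, chunk_size=65536):
    """Return the hex SHA-256 digest of the file at `path`, read in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        # Read fixed-size chunks so large files are not loaded into memory at once.
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def matches_published_hash(path, published_hex):
    """Compare the file's digest with a publisher-supplied hex string (hypothetical helper)."""
    actual = sha256_of_file(path)
    # Normalize whitespace and letter case before comparing.
    expected = published_hex.strip().lower()
    return hmac.compare_digest(actual, expected)
```

A matching hash only shows that the file is the one the publisher described. It says nothing about whether the app itself is safe, so it is no substitute for the vetting that official stores provide.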

Another concern is data privacy. An unofficial app might be designed to collect your personal information without your knowledge or consent. This could include your location, contacts, photos, or even your browsing history. This data can then be sold to third parties or used for identity theft. It's a major privacy concern that many people overlook.

Furthermore, these apps are often unstable and might not work correctly. They could crash frequently, cause your device to slow down, or even damage your operating system. There's no customer support, no updates, and no guarantee that the app will perform as advertised. Installing one is a gamble.

Finally, using modified apps can put you at risk of legal issues, especially if the app involves copyright infringement or unethical activities. Software developers put a lot of work into their creations, and using a modified version often violates their terms of service or intellectual property rights. That alone is reason to be careful.

Ethical Concerns and Privacy

The discussion around tools like an "undress AI mod APK" quickly brings us to some very serious ethical questions, particularly concerning privacy. The ability of AI to generate or alter images of people, especially in a way that creates non-consensual intimate imagery, is deeply troubling. It challenges our basic expectations of personal privacy and digital safety.

The core issue is that these technologies can be used to create images that appear real but are entirely fabricated. When such images depict individuals without their permission, it represents a severe violation of their autonomy and dignity. It's a bit like someone inventing a false story about you and then making everyone believe it. This kind of manipulation can cause immense harm.

Think about the potential for these images to be shared online, where nobody controls them once they are out there. The internet has a way of making things permanent, and once an image is distributed, it can be nearly impossible to remove completely. This creates a lasting digital footprint that can haunt individuals for a very long time.

Moreover, the existence of such tools contributes to a climate where trust in digital media erodes. If we can no longer tell what is real and what is fake, it becomes harder to believe what we see online, which has broader societal implications. It means we have to be more careful than ever about what we consume and share.

Consent and Personal Boundaries

At the heart of the ethical debate about AI image manipulation, particularly with an "undress AI mod APK," is the fundamental principle of consent. Every person has a right to control how their image is used, especially when it comes to intimate or revealing depictions. When AI is used to create such images without a person's explicit permission, it is a clear violation of their personal boundaries.

The act of creating or sharing non-consensual intimate imagery, even if it's AI-generated, can be deeply traumatizing for the individual depicted. It can lead to feelings of shame, humiliation, and a profound sense of betrayal. The psychological impact can be severe and long-lasting, affecting mental health and relationships. It's a very serious form of abuse that often goes unseen.

It's important to understand that just because a technology exists, it does not mean it should be used without ethical consideration. The ease with which AI can generate these images does not lessen the harm they cause. In fact, it might even amplify it, making it easier for malicious actors to target individuals.

Upholding consent means respecting an individual's right to privacy and their bodily autonomy. It means recognizing that every person has the right to decide what is done with their image, and that includes any alterations made by artificial intelligence. This principle should guide all our interactions with technology. It's a matter of basic human respect.

The Spread of Misinformation

Beyond the direct harm to individuals, AI tools that can manipulate images, like what an "undress AI mod APK" purports to do, contribute to a wider problem of misinformation. When it becomes difficult to distinguish between real and AI-generated images, the public's ability to trust visual information is compromised. This can have far-reaching consequences for society.

Fabricated images can be used to spread false narratives, discredit individuals, or even influence public opinion on important matters. Imagine a fake image of a public figure doing something they never did; this could cause widespread confusion and damage. It's a very powerful tool for deception.

The ease of creating these images means that anyone with access to the technology can potentially become a creator of misinformation. This puts the ability to deceive in many more hands, making it harder for institutions and individuals to verify the authenticity of visual content. It's a serious challenge for everyone who relies on accurate information, and it seems to be growing.

Combating this requires a collective effort: media literacy education, the development of robust detection tools, and a commitment from platforms to identify and remove harmful deepfakes. It's a constant battle, but one that is essential for maintaining a healthy information environment.

Emotional and Reputational Harm

The creation and spread of non-consensual intimate imagery, even if AI-generated, can inflict severe emotional and reputational harm on the individuals targeted. For the person depicted, discovering that their image has been used in such a way can lead to intense feelings of violation, shame, and helplessness. It's a very personal attack that can feel deeply invasive, as though their privacy has been completely shattered.

The reputational damage can be equally devastating. Once these images are online, they can be difficult to remove, and they can impact a person's professional life, personal relationships, and overall standing in their community. The stigma associated with such imagery can follow an individual for years, regardless of whether the images were real or fabricated.

Victims often experience anxiety, depression, and post-traumatic stress. The feeling of having one's body or image exploited without consent is a profound violation that can erode a person's sense of safety and trust. It's a very difficult experience to go through, and it requires a lot of support. This kind of harm is especially insidious because it attacks a person's identity.

Society needs to recognize the gravity of this harm and treat AI-generated non-consensual intimate imagery with the same seriousness as other forms of digital abuse. Support systems for victims, legal recourse, and public awareness campaigns are all crucial in addressing this growing problem. We need to do more to protect people.

Legal Perspectives

The legal landscape surrounding AI-generated content, especially non-consensual intimate imagery like what an "undress AI mod APK" might create, is still developing, but many jurisdictions are beginning to enact specific laws. In many places, creating or distributing such images without consent is illegal, regardless of whether the image is real or fabricated.

Laws often focus on the act of creating or sharing the image with the intent to harm, harass, or exploit. The fact that an image is AI-generated does not typically provide a legal shield for the perpetrator. Courts are increasingly recognizing the severe harm caused by these deepfakes and are applying existing laws or creating new ones to address them. In many places, it is a serious offense.

For example, some countries and states have passed "revenge porn" laws that cover the non-consensual sharing of intimate images. These laws are often being expanded to include digitally altered or synthetic images. There are also laws related to defamation, harassment, and identity theft that could apply. It's a complex area with many different legal angles.

Individuals who create or distribute such content could face significant penalties, including fines, imprisonment, and civil lawsuits from the victims. The legal consequences can be very severe, and ignorance of the law is generally not considered an excuse. People should be aware of this before they even think about using such tools.

It's always best to consult with legal professionals for specific advice regarding the laws in your region, as they can vary greatly. The legal system is still trying to catch up with the rapid pace of technological change, so this area of law is constantly evolving.

Responsible AI Use

Given the powerful capabilities of AI in image generation, it is important for everyone to think about responsible use. This means understanding the ethical implications of the technology and making choices that respect privacy, consent, and digital safety. It's about using these tools wisely and with care. We all have a part to play.

For developers, responsible AI use involves building safeguards into their systems to prevent misuse, ensuring transparency about how their AI is trained, and actively working to detect and mitigate harmful outputs. It's about designing technology with ethics in mind from the very beginning, which is a crucial step for the future of AI.

For users, responsible AI use means being mindful of the content you create and share, especially when it involves images of people. Always obtain explicit consent before using someone's likeness in AI-generated content, and never create or disseminate images that could cause harm or violate privacy. It's a matter of showing respect for others. Think before you click.

It also means being critical consumers of AI-generated content. Develop your media literacy skills to help you identify deepfakes and question the authenticity of images you encounter online. If something looks too good to be true, or if it evokes a strong emotional reaction, it's often worth a second look. Staying informed is a key part of this habit.

Ultimately, responsible AI use is about fostering a digital environment where innovation can thrive without compromising human dignity or safety. It's a shared responsibility that requires ongoing dialogue, education, and ethical consideration from everyone involved. We are, in many respects, just at the beginning of this conversation.

Legitimate AI Image Tools

While discussions around "undress AI mod APK" highlight the potential for misuse, it's important to remember that many legitimate and ethical AI image generation tools exist. These tools are designed for creative expression, artistic exploration, and practical applications, all while adhering to ethical guidelines and respecting user consent. They offer real possibilities for artists and creators.

For instance, tools like Midjourney, DALL-E, and Stable Diffusion allow users to create stunning images from text prompts, generate unique artwork, or even assist with design projects. These platforms often have built-in safeguards to prevent the creation of harmful or explicit content, and they prioritize
